What makes the difference in data rates between FDD and TDD?

From the description:

Due to the differences between FDD and TDD, the same amount of aggregated bandwidth results in different downlink and uplink data rates.

Therefore, 40-MHz aggregated bandwidth in FDD-LTE results in a maximum downlink data rate of 300 Mb/s, while 40 MHz aggregated in TDD-LTE results in a maximum rate of 224 Mb/s.

As shown above, the data rate of FDD (Frequency Division Duplexing) is clearly higher than that of TDD (Time Division Duplexing).

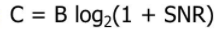

Nevertheless, looking at the Shannon theorem, I don't know what makes the difference.

What keywords should I google?

Thanks~!

FDD means the transmitter and receiver both operate at the same time (all the time), each on its own frequency band, whereas in TDD they share one band and only one is active at a time. So the average data rate over a fixed time interval for a given bandwidth will be higher in FDD than in TDD mode.
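As a rough illustration of that averaging argument, the sketch below treats TDD as pure time-sharing of a link whose peak rate equals the FDD downlink figure. The function name and the `dl_fraction` parameter are hypothetical, and the model ignores guard periods and special subframes, so it is only a first-order estimate and not how LTE rates are actually specified.

```python
def tdd_average_rate(peak_rate_mbps: float, dl_fraction: float) -> float:
    """Average downlink rate when the downlink is active only part of the time."""
    if not 0.0 <= dl_fraction <= 1.0:
        raise ValueError("dl_fraction must be between 0 and 1")
    return peak_rate_mbps * dl_fraction


# Figures quoted in the question for 40 MHz of aggregated bandwidth.
fdd_rate = 300.0  # Mb/s, FDD-LTE downlink
tdd_rate = 224.0  # Mb/s, TDD-LTE downlink

# Downlink time share implied by those two figures under this simple model
# (an assumption of the sketch, not a value taken from the LTE specification).
implied_dl_fraction = tdd_rate / fdd_rate

print(f"implied downlink share: {implied_dl_fraction:.3f}")
print(f"TDD average rate: {tdd_average_rate(fdd_rate, implied_dl_fraction):.1f} Mb/s")
```

Under this model the gap between the two quoted rates corresponds to the downlink being active roughly three quarters of the time.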

The Shannon theorem gives the maximum possible data rate, which means the operating data rate, whether FDD or TDD, can never exceed that limit.
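To make that bound concrete, here is a minimal sketch of the Shannon-Hartley formula, C = B log2(1 + SNR), for a single channel. The 30 dB SNR is an arbitrary assumption chosen for illustration, not a value from the question, and the function name is hypothetical.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity of a single AWGN channel, in bits per second."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


bandwidth_hz = 40e6               # 40 MHz, as in the question
snr_db = 30.0                     # assumed SNR, for illustration only
snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio

capacity = shannon_capacity_bps(bandwidth_hz, snr_linear)
print(f"upper bound while the channel is in use: {capacity / 1e6:.1f} Mb/s")
```

The formula bounds the rate while a direction is actually transmitting. It says nothing about how much of the time that direction gets the channel, which is where FDD and TDD differ.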

FDD is full-duplex transmission (RX and TX are on at the same time), while TDD is half-duplex (RX and TX are on in different time slots).

So TDD has lower overall throughput than FDD, but it has the advantage of using only one frequency channel for both RX and TX.

FDD needs two channels (one for RX and one for TX).