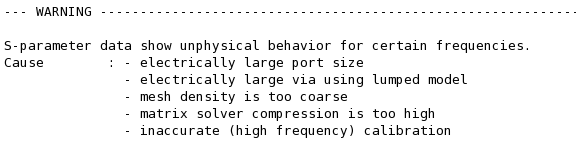

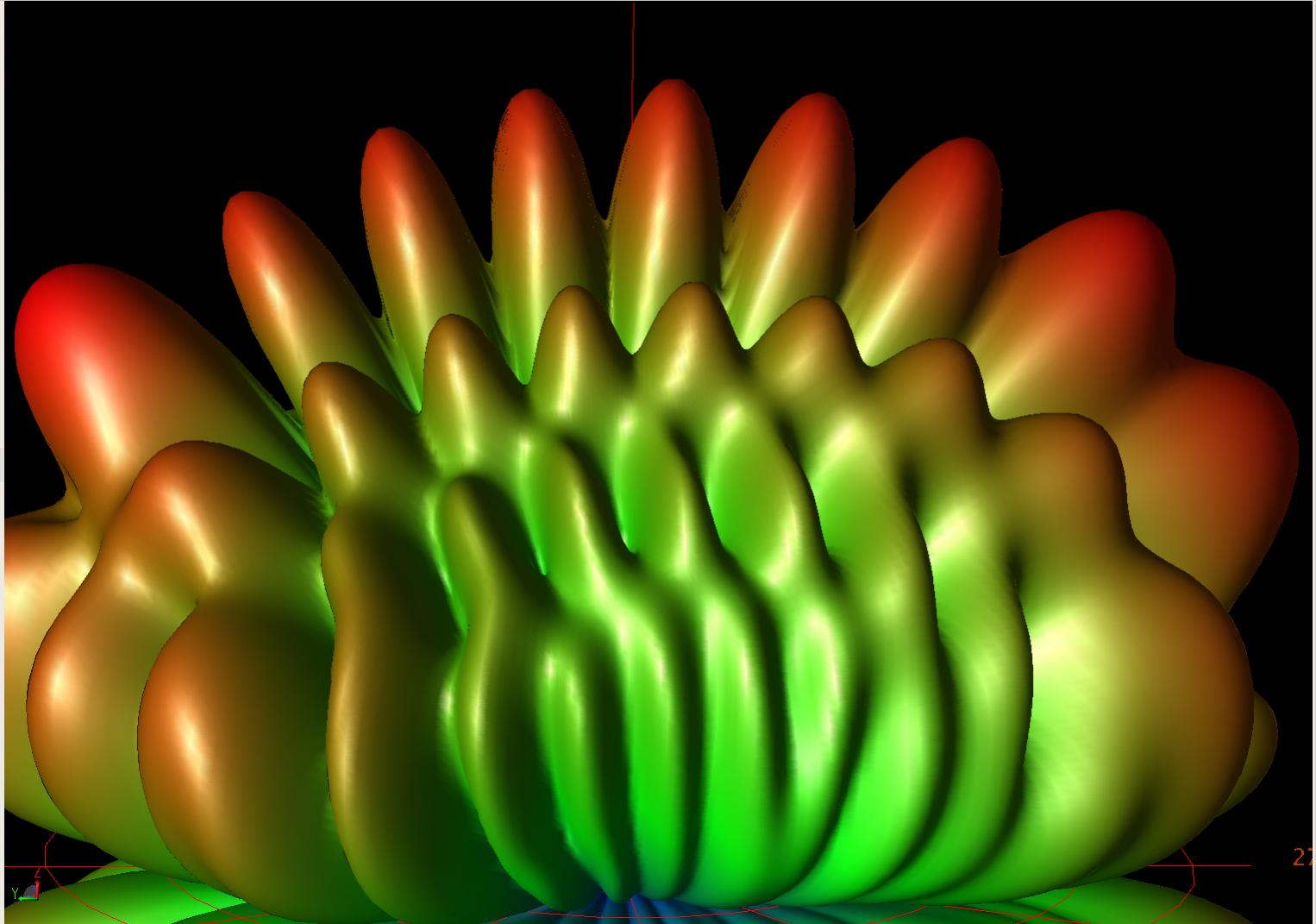

Distorted radiation pattern for antenna array in ADS

I am using ADS 2016. To reduce the time taken for each simulation, I have reduced the mesh density (cells/wavelength) to 5 and set the solver compression level to "Reduced". Could these changes distort the radiation pattern I get from my simulation?

I can see from the figure that I have grating lobes, and to reduce them I need an inter-element spacing of at most λ/2. I am working with an inter-element spacing of 1.9 mm.
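As a quick sanity check on the λ/2 rule, here is a minimal Python sketch. It uses the usual uniform-linear-array condition d ≤ λ/(1 + |sin θ0|), which reduces to λ/2 when the beam may be scanned all the way to endfire. The operating frequency is not stated in this thread, so the function names and the scan-angle parameter are illustrative assumptions, and λ is taken as the free-space wavelength.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def max_spacing_no_grating_lobes(freq_hz, scan_angle_deg=90.0):
    """Largest element spacing (m) that keeps grating lobes out of visible
    space for a uniform linear array scanned up to scan_angle_deg from
    broadside. With the default 90 degrees this is the lambda/2 rule."""
    wavelength = C0 / freq_hz
    return wavelength / (1.0 + abs(math.sin(math.radians(scan_angle_deg))))


def highest_grating_lobe_free_freq(spacing_m, scan_angle_deg=90.0):
    """Highest frequency (Hz) at which the given spacing still satisfies
    the same condition."""
    min_wavelength = spacing_m * (1.0 + abs(math.sin(math.radians(scan_angle_deg))))
    return C0 / min_wavelength


if __name__ == "__main__":
    d = 1.9e-3  # inter-element spacing from the post, in metres
    f_limit = highest_grating_lobe_free_freq(d)
    print(f"1.9 mm equals lambda/2 at about {f_limit / 1e9:.1f} GHz")
```

If the design frequency is above the printed value, the 1.9 mm spacing exceeds λ/2 and grating lobes can appear for wide scan angles. Below it, the spacing alone should not be the cause.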

Is the distortion shown a problem with my design and concept, or an issue with the software that I am not understanding?

I am attaching the file below.

Any sort of help will be much appreciated.

Why?

Mesh density controls the possible current distribution, and 5 cells per wavelength (with linear current gradient on each cell) is not enough to represent the correct physical currents flowing on your circuit.

A common default value is 20 cells/wavelength. For antennas where accuracy is not as critical, 10 cells/wavelength might be enough.
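To get a feel for what these numbers mean, here is a rough Python sketch that converts a cells/wavelength setting into an approximate cell edge length and estimates how the cell count grows with density. The 10 GHz frequency and the effective permittivity are placeholder assumptions (neither is given in this thread), and the quadratic scaling is only a rule of thumb for a planar surface mesh, not a description of how ADS actually builds its mesh.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def approx_cell_size(freq_hz, cells_per_wavelength, eps_eff=1.0):
    """Approximate mesh cell edge length in metres.
    eps_eff is an assumed effective relative permittivity (1.0 = free space)."""
    wavelength = C0 / (freq_hz * math.sqrt(eps_eff))
    return wavelength / cells_per_wavelength


def relative_cell_count(old_density, new_density):
    """Rough growth factor in the number of cells for a planar (2D) mesh:
    the cell count scales with the square of the linear density."""
    return (new_density / old_density) ** 2


if __name__ == "__main__":
    freq = 10e9  # placeholder frequency, not taken from the post
    for density in (5, 10, 20):
        size_mm = approx_cell_size(freq, density) * 1e3
        growth = relative_cell_count(5, density)
        print(f"{density:2d} cells/wavelength: ~{size_mm:.2f} mm cells, "
              f"~{growth:.0f}x the cells of the 5 cells/wavelength mesh")
```

Going from 5 to 20 cells/wavelength shrinks each cell by a factor of 4 and increases the cell count by roughly 16, which is why the simulation time goes up so sharply.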

How critical is the solver compression level for the antenna simulations I am performing?

I never changed that setting because my machine has 48GB anyway, but just looking at the setting now: is this really what you want? Doesn't reduced compression take more memory?

qthelp://ads.2016.01/doc/em/Defining_Solver_Settings.html

"Doesn't reduced compression take more memory"

I don't think so. Maybe the higher I go, the harder it is for the system to deliver the performance. If I set the solver compression level to "Normal", the system always gives me this warning and I get a performance error.